This article was last updated on 2025-08-01.

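Preface

1. Prerequisites

The prerequisite configuration follows the earlier deployment guide. The final step, the offline KubeSphere deployment, was made possible by technical support from 运维有术, to whom I am very grateful!

1.1 Managing kernel parameters and modules with systemd

On Kylin, settings placed directly in /etc/sysctl.d/ and /etc/sysconfig/modules/ may not survive a reboot. It is therefore recommended to let systemd apply them at boot so that the configuration persists.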

cat > /etc/systemd/system/k8s-modules.service <<EOF
# Load the kernel modules Kubernetes needs at boot, and pull in sysctl-k8s.service
[Unit]
Description=Load Kubernetes required kernel modules
# Load the kernel modules before the network is initialized
Before=network-pre.target
# Pull in network-pre.target and also start sysctl-k8s.service
Wants=network-pre.target sysctl-k8s.service
# Disable the default dependencies and control the ordering manually
DefaultDependencies=no

[Service]
# One-shot task: run and exit
Type=oneshot
# Load the kernel modules used by k8s (both scripts must be executable)
ExecStart=/etc/sysconfig/modules/ipvs.modules
ExecStart=/etc/sysconfig/modules/br_netfilter.modules
# Keep the unit in the active state after the commands have finished
RemainAfterExit=yes

[Install]
# Start automatically when the system reaches multi-user mode
WantedBy=multi-user.target
EOF

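cat > /etc/systemd/system/sysctl-k8s.service <<EOF
[Unit]
Description=Apply the sysctl kernel parameters required by Kubernetes (adapted for Kylin)
# Run before the network is initialized so that parameters such as ip_forward take effect in time
Before=network-pre.target
Wants=network-pre.target
# Load the kernel modules first; otherwise some sysctl paths do not exist yet
After=k8s-modules.service
Requires=k8s-modules.service
# Disable the default dependencies so systemd does not attach an unnecessary dependency chain and the unit starts as early as possible
DefaultDependencies=no
# Why not sysctl --system:
# sysctl --system loads *.conf files from several paths,
# but it relies on systemd-sysctl.service to run automatically, and on Kylin that service may be disabled or ordered late.
# As a result, key parameters (such as ip_forward) may not be in effect before kubelet or the network plugin starts.
# Orchestrating a dedicated systemd service is therefore the more reliable approach.

[Service]
Type=oneshot
# Load our custom sysctl configuration for Kubernetes
ExecStart=/usr/sbin/sysctl -p /etc/sysctl.d/k8s.conf
RemainAfterExit=true

[Install]
# Start automatically in multi-user mode so the parameters are applied at boot
WantedBy=multi-user.target
EOF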
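The two units above run /etc/sysconfig/modules/ipvs.modules, /etc/sysconfig/modules/br_netfilter.modules and /etc/sysctl.d/k8s.conf, which come from the earlier guide and are not reproduced here. As a minimal sketch of what those files might contain: the module list and the three sysctl keys are assumptions based on the usual Kubernetes prerequisites, and the `write_k8s_prereqs` helper with its root-prefix argument is hypothetical, added only so the files can be generated into a scratch directory first.

```shell
#!/bin/sh
# Sketch: create the three files that the units above expect.
# write_k8s_prereqs ROOT: ROOT is a hypothetical prefix; pass "" to write to the real /etc.
write_k8s_prereqs() {
  root="$1"
  mkdir -p "$root/etc/sysconfig/modules" "$root/etc/sysctl.d"

  # Assumed module list: the common IPVS schedulers plus conntrack.
  cat > "$root/etc/sysconfig/modules/ipvs.modules" <<'EOF'
#!/bin/sh
for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
  /sbin/modprobe -- "$m"
done
EOF

  # Assumed module list: overlay for containerd, br_netfilter for bridged traffic.
  cat > "$root/etc/sysconfig/modules/br_netfilter.modules" <<'EOF'
#!/bin/sh
/sbin/modprobe -- overlay
/sbin/modprobe -- br_netfilter
EOF

  # systemd starts both scripts through ExecStart, so they must be executable.
  chmod 755 "$root/etc/sysconfig/modules/ipvs.modules" \
            "$root/etc/sysconfig/modules/br_netfilter.modules"

  # Assumed parameter set: bridge netfilter hooks and IPv4 forwarding.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real node this would be run as root with `write_k8s_prereqs ""`, followed by `systemctl daemon-reload` and `systemctl enable --now k8s-modules.service sysctl-k8s.service`. After a reboot, `lsmod | grep ip_vs` and `sysctl net.ipv4.ip_forward` show whether the settings were applied.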

2. Dependency setup

2.1 Preparing the installation packages

Spin up a Yitian ARM server on Alibaba Cloud; it costs roughly 0.2 CNY per hour.

# Set up the Tsinghua University (TUNA) Kubernetes mirror
cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/core:/stable:/v1.30/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key
# [cri-o]
# name=CRI-O
# baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/addons:/cri-o:/stable:/v1.28/rpm/
# enabled=1
# gpgcheck=1
# gpgkey=https://pkgs.k8s.io/addons:/cri-o:/prerelease:/main/rpm/repodata/repomd.xml.key
EOF

# Refresh the yum metadata and update the installed packages
yum clean all
yum makecache
yum update

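# Download the RPM packages at the pinned versions
mkdir -p /app/k8s-install && cd /app/k8s-install
yumdownloader --destdir=./ kubeadm-1.30.6 kubectl-1.30.6 kubelet-1.30.6
# cri-tools is versioned separately; the v1.30 repository ships 1.30.1 (see the listing below)
yumdownloader --destdir=./ cri-tools-1.30.1
yumdownloader --destdir=./ kubernetes-cni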

[root@iZwz98idus54zis8nd1e2nZ k8s-install]# ll

total 47304

-rw-r--r-- 1 root root 7782344 Aug 6 2024 cri-tools-1.30.1-150500.1.1.aarch64.rpm

-rw-r--r-- 1 root root 9042164 Oct 23 2024 kubeadm-1.30.6-150500.1.1.aarch64.rpm

-rw-r--r-- 1 root root 9367248 Oct 23 2024 kubectl-1.30.6-150500.1.1.aarch64.rpm

-rw-r--r-- 1 root root 15862040 Oct 23 2024 kubelet-1.30.6-150500.1.1.aarch64.rpm

-rw-r--r-- 1 root root 6360596 Apr 18 2024 kubernetes-cni-1.4.0-150500.1.1.aarch64.rpm
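Because the packages are downloaded on a cloud host and installed elsewhere, it can be worth confirming that nothing for the wrong architecture slipped in before packaging. A minimal sketch follows; `list_foreign_rpms` is a hypothetical helper, and it only inspects file names, so it assumes the usual `name-version-release.arch.rpm` naming.

```shell
#!/bin/sh
# list_foreign_rpms DIR: print every RPM in DIR whose file name is neither
# *.aarch64.rpm nor *.noarch.rpm. Prints nothing when all packages fit arm64.
list_foreign_rpms() {
  for f in "$1"/*.rpm; do
    # Skip the unexpanded pattern when DIR contains no RPMs at all.
    [ -e "$f" ] || continue
    case "$f" in
      *.aarch64.rpm|*.noarch.rpm) ;;
      *) printf '%s\n' "${f##*/}" ;;
    esac
  done
}
```

For the listing above, `list_foreign_rpms /app/k8s-install` should print nothing. For a check that does not depend on file names, `rpm -qp --queryformat '%{ARCH}\n' <file>` reads the architecture from the package header.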

# Download containerd
mkdir -p /app/k8s-install/containerd && cd /app/k8s-install/containerd
wget https://github.com/containerd/containerd/releases/download/v1.7.3/cri-containerd-1.7.3-linux-arm64.tar.gz

# Download runc
mkdir -p /app/k8s-install/containerd/runc && cd /app/k8s-install/containerd/runc
wget https://github.com/opencontainers/runc/releases/download/v1.1.5/libseccomp-2.5.4.tar.gz
wget https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.arm64

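# Package everything and download it to the local Windows machine
# (archive from /app so that the k8s-install directory is found, and use -z to actually gzip it)
cd /app
tar -czf k8s-install.tar.gz k8s-install
sz k8s-install.tar.gz

2.3 Preparing the images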

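List all required images with the command below, then push them to the private registry using the script I shared previously. Remember to set the script's architecture option to IS_ARM64=true.

# I recommend adding an extra path level in Harbor, e.g. harbor.tanqidi.com/k8s/arm64, so that replicating to another environment later only requires copying everything under arm64.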

[root@iZwz98idus54zis8nd1e2nZ app]# kubeadm config images list --kubernetes-version=v1.30.6

registry.k8s.io/kube-apiserver:v1.30.6

registry.k8s.io/kube-controller-manager:v1.30.6

registry.k8s.io/kube-scheduler:v1.30.6

registry.k8s.io/kube-proxy:v1.30.6

registry.k8s.io/coredns/coredns:v1.11.3

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.15-0

# I have installed the Calico network plugin v3.26.1 before, so I know it needs the following images.

quay.io/tigera/operator:v1.30.4

docker.io/calico/typha:v3.26.1

docker.io/calico/kube-controllers:v3.26.1

docker.io/calico/cni:v3.26.1

docker.io/calico/node:v3.26.1

docker.io/calico/pod2daemon-flexvol:v3.26.1

docker.io/calico/apiserver:v3.26.1

docker.io/calico/csi:v3.26.1

docker.io/calico/node-driver-registrar:v3.26.1

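# With harbor.tanqidi.com/k8s/arm64 as the private registry, images are pushed to paths such as harbor.tanqidi.com/k8s/arm64/kube-apiserver:v1.30.6. Repeat this step until every required image has been pulled and pushed to the private registry; then move on to the deployment.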

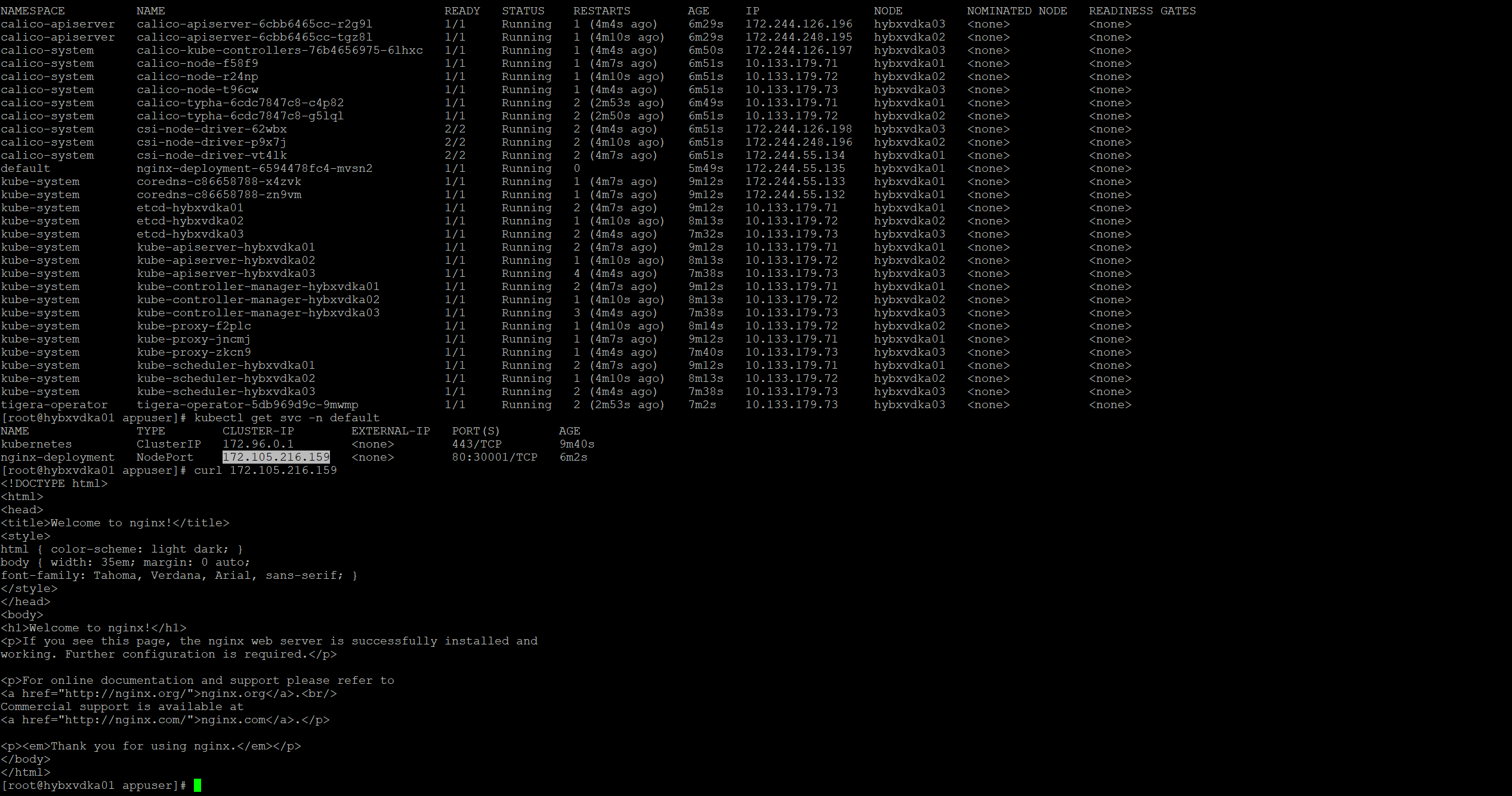
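The push script itself is not reproduced in this article, so the following is only a sketch of the path mapping described above, not the script's actual logic. `target_ref` and `plan_mirror` are hypothetical helpers, and the sketch assumes docker is the client and that only the final `name:tag` component of the source reference is kept. `plan_mirror` prints the commands instead of running them, so the plan can be reviewed first.

```shell
#!/bin/sh
# target_ref REPO SRC: map a source image reference into REPO,
# keeping only the last path component (name:tag) of SRC.
target_ref() {
  printf '%s/%s\n' "${1%/}" "${2##*/}"
}

# plan_mirror REPO SRC...: print (not run) the pull/tag/push commands
# that would mirror each arm64 image into REPO.
plan_mirror() {
  repo="$1"
  shift
  for src in "$@"; do
    dst=$(target_ref "$repo" "$src")
    echo "docker pull --platform linux/arm64 $src"
    echo "docker tag $src $dst"
    echo "docker push $dst"
  done
}
```

For example, `plan_mirror harbor.tanqidi.com/k8s/arm64 registry.k8s.io/coredns/coredns:v1.11.3` plans a push to harbor.tanqidi.com/k8s/arm64/coredns:v1.11.3. Flattening the path this way matches where kubeadm looks for CoreDNS when a custom `--image-repository` is set.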

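./push registry.k8s.io/kube-apiserver:v1.30.6

3. Deploying the k8s cluster

3.1 Installing the RPMs

Prefer the offline RPM packages for installation. Intranet machines usually have a private YUM repository configured, so dependencies can normally be installed directly. If something is missing, go back to the Yitian ARM server and download it following the steps above.

# Upload, extract, and install; resolve any missing dependencies with yum install.
rpm -ivh xxxxxx.rpm

3.2 Initializing the cluster

As in the earlier article, I put haproxy in front of the API server, so the control-plane endpoint here uses port 16443.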

kubeadm init \
  --control-plane-endpoint "vip.k8s.local:16443" \
  --upload-certs \
  --apiserver-advertise-address=10.133.179.71 \
  --apiserver-bind-port=6443 \
  --pod-network-cidr=172.244.0.0/16 \
  --service-cidr=172.96.0.0/12 \
  --image-repository=harbor.bx.tanqidi.com/k8s/arm64 \
  --kubernetes-version=1.30.6 \
  --node-name=hybxvdka01

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join vip.k8s.local:16443 --token 0zyozq.ssfzlduenow4csxw \

--discovery-token-ca-cert-hash sha256:9359d7ec5175c1863072c1ac60d0f0d8840c1fefae1d72dfc08ef82abd28d322 \

--control-plane --certificate-key 38a2a43ff87b088d813c9faf70d9c23098509e6ec1f78177cb89bd105bb47d64

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join vip.k8s.local:16443 --token 0zyozq.ssfzlduenow4csxw \

--discovery-token-ca-cert-hash sha256:9359d7ec5175c1863072c1ac60d0f0d8840c1fefae1d72dfc08ef82abd28d322
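The flags passed to kubeadm init above can also be kept in a configuration file, which is easier to version and review. This is a sketch of an equivalent file using the kubeadm v1beta3 API, not what was actually run here; `render_kubeadm_config` is a hypothetical helper and every value is copied from the command line above.

```shell
#!/bin/sh
# render_kubeadm_config: print a kubeadm v1beta3 configuration equivalent
# to the kubeadm init flags used in this article.
render_kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.133.179.71
  bindPort: 6443
nodeRegistration:
  name: hybxvdka01
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.30.6
controlPlaneEndpoint: vip.k8s.local:16443
imageRepository: harbor.bx.tanqidi.com/k8s/arm64
networking:
  podSubnet: 172.244.0.0/16
  serviceSubnet: 172.96.0.0/12
EOF
}
```

Usage would be `render_kubeadm_config > kubeadm-config.yaml` followed by `kubeadm init --config kubeadm-config.yaml --upload-certs`. The `--upload-certs` flag stays on the command line because it has no field in these two configuration kinds.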

4. Deploying the network plugin

Deploy it as described in the previous article; here I chose the IPIP network mode.

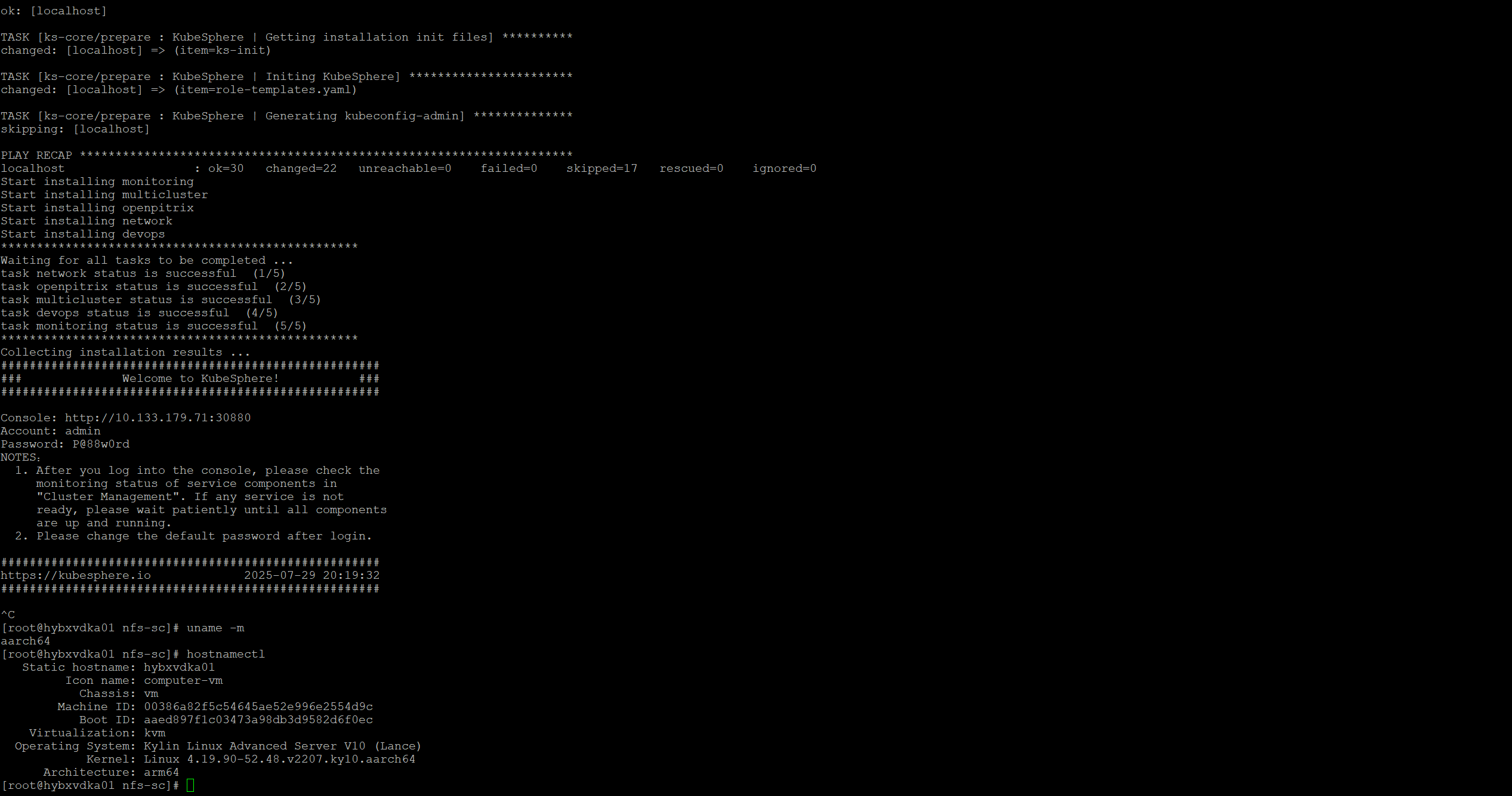
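The Calico manifests live in the previous article and are not repeated here. Since the image list includes the Tigera operator, IPIP mode would typically be selected in the operator's Installation resource. The sketch below assumes that operator-based setup; `render_calico_installation` is a hypothetical helper, and the pool CIDR must match the `--pod-network-cidr` given to kubeadm init.

```shell
#!/bin/sh
# render_calico_installation CIDR: print a Tigera operator Installation
# resource with a single IP pool that uses IPIP encapsulation.
render_calico_installation() {
  cidr="$1"
  cat <<EOF
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    ipPools:
      - cidr: ${cidr}
        encapsulation: IPIP
        natOutgoing: Enabled
        nodeSelector: all()
EOF
}
```

For this cluster the call would be `render_calico_installation 172.244.0.0/16 > custom-resources.yaml`. Pointing the operator at the private registry requires additional fields that are left out of this sketch.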

5. Offline deployment of KubeSphere

5.1 Collecting the images

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.4.1/kubesphere-installer.yaml
kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.4.1/cluster-configuration.yaml

5.2 Troubleshooting

I recommend deploying with the newer kubesphere/ks-installer:v3.4.1-patch.0 image by editing kubesphere-installer.yaml. If KubeSphere is already deployed, refer to the official post for the fix.
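Switching to the patched installer image can be scripted on a downloaded copy of kubesphere-installer.yaml. A minimal sketch: `patch_installer_image` is a hypothetical helper, and it assumes the manifest references the image as an unquoted `ks-installer:v3.4.1` at the end of a line.

```shell
#!/bin/sh
# patch_installer_image FILE: rewrite ks-installer:v3.4.1 to
# ks-installer:v3.4.1-patch.0 in FILE. Lines that already carry the
# -patch.0 suffix do not match, so running it twice is harmless.
patch_installer_image() {
  sed 's#\(ks-installer:v3\.4\.1\)[[:space:]]*$#\1-patch.0#' "$1" > "$1.tmp" \
    && mv "$1.tmp" "$1"
}
```

After `patch_installer_image kubesphere-installer.yaml`, running `grep ks-installer kubesphere-installer.yaml` confirms the new tag before the file is applied with kubectl.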

Kylin arm64: offline deployment of Kubernetes v1.30.x with Kubeadm

https://tanqidi.com/archives/73a9f41e-a9f1-4d16-9fb1-1055cf010cf8
