1. Prerequisites

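On some systems, kernel parameters and modules are not loaded automatically at boot. In that case, manage them with systemd so that the required kernel parameters and modules are loaded correctly at startup.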

1.1 Configure hostnames

# Run the matching command on each node

hostnamectl set-hostname k8s-master01

hostnamectl set-hostname k8s-worker01

hostnamectl set-hostname k8s-worker02

1.2 Configure hosts

# Add these entries to /etc/hosts on every node

172.31.0.111 k8s-master01

172.31.0.112 k8s-worker01

172.31.0.113 k8s-worker02

1.3 Firewall configuration

systemctl disable firewalld && systemctl stop firewalld

systemctl disable iptables && systemctl stop iptables

systemctl status firewalld

systemctl status iptables

1.4 Upgrade the kernel

If your kernel is relatively old, you can follow these steps to upgrade to a newer one.

1.5 SELinux configuration

setenforce 0

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

sestatus

reboot

1.6 Configure IP forwarding and bridge filtering

# Create a sysctl file for bridge filtering and IP forwarding

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

# Load the br_netfilter module (the net.bridge.* keys only exist once it is loaded)

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Check that the module is loaded

lsmod | grep br_netfilter

br_netfilter 22256 0

# Load br_netfilter again at every boot via /etc/sysconfig/modules (RHEL/CentOS convention)

cat > /etc/sysconfig/modules/br_netfilter.modules <<EOF
#!/bin/bash
modprobe br_netfilter
EOF

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

1.7 Install ipset and ipvsadm

yum -y install ipset ipvsadm

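# Configure how the IPVS kernel modules are loaded and list the modules to load
# (on kernels older than 4.19 the conntrack module is named nf_conntrack_ipv4)

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF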

chmod 755 /etc/sysconfig/modules/ipvs.modules
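Section 1 recommends letting systemd load the kernel modules at boot, while the steps above rely on the RHEL/CentOS /etc/sysconfig/modules scripts. As a hedged alternative, systemd-modules-load reads module names from /etc/modules-load.d/*.conf at boot. The sketch below writes such a file; the function name and the k8s.conf file name are illustrative, and the demo writes into a temporary directory so it runs without root.

```bash
#!/usr/bin/env bash
# Sketch: persist br_netfilter and the IPVS modules via systemd-modules-load.
# Assumption: on a real node the target directory is /etc/modules-load.d.
set -euo pipefail

write_k8s_modules_conf() {
  local dir="$1"
  mkdir -p "$dir"
  # systemd-modules-load reads one module name per line from *.conf files
  cat > "$dir/k8s.conf" <<'EOF'
br_netfilter
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF
}

# Demo: write into a temporary directory instead of /etc
demo_dir=$(mktemp -d)
write_k8s_modules_conf "$demo_dir"
cat "$demo_dir/k8s.conf"
# On a real node (as root): write_k8s_modules_conf /etc/modules-load.d
# then: systemctl restart systemd-modules-load
```

Restarting systemd-modules-load loads the listed modules immediately instead of waiting for the next boot.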

Make the script executable, run it, and check that the modules are loaded:

[root@k8s-master01 ~]# bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 155648 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 147456 2 xt_conntrack,ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 3 nf_conntrack,xfs,ip_vs

1.8 Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

echo "vm.swappiness=0" >> /etc/sysctl.conf

sysctl -p
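The sed above comments out every fstab line that merely contains the word swap, which also catches unrelated mounts whose path includes it. A narrower sketch, assuming a standard whitespace-separated fstab, only touches active entries whose third field (the filesystem type) is swap and keeps a .bak copy; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: comment out only fstab entries whose filesystem type (field 3) is swap.
set -euo pipefail

comment_swap_entries() {
  local fstab="$1"
  cp -p "$fstab" "$fstab.bak"   # backup next to the original
  # Leave existing comments alone; prefix active swap entries with '#'
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Demo on a sample file; the /data/swapfiles mount must stay untouched
demo_fstab=$(mktemp)
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /               xfs     defaults        0 0
/dev/sdb1               /data/swapfiles xfs     defaults        0 0
/dev/mapper/centos-swap swap            swap    defaults        0 0
EOF
comment_swap_entries "$demo_fstab"
cat "$demo_fstab"
# On a real node: comment_swap_entries /etc/fstab
```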

2. Deploy containerd

2.1 Download the release package

mkdir -p /tmp/k8s-install/ && cd /tmp/k8s-install

wget https://github.com/containerd/containerd/releases/download/v1.7.3/cri-containerd-1.7.3-linux-amd64.tar.gz

# Extract into / so that the binaries land in /usr/local/bin/

tar xf cri-containerd-1.7.3-linux-amd64.tar.gz -C /

# Generate the default config.toml

mkdir -p /etc/containerd/ && containerd config default > /etc/containerd/config.toml

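# Edit /etc/containerd/config.toml and change sandbox_image = "registry.k8s.io/pause:3.8" to the Aliyun mirror of pause:3.9 (the version kubeadm 1.28 expects, see kubeadm config images list in 3.4):

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"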

# Verify

[root@k8s-master01 tmp]# cat /etc/containerd/config.toml |grep pause:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
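The same config.toml edit can be scripted. The sketch below assumes the file came from containerd config default (1.7.x). It also flips SystemdCgroup to true for the runc runtime, on the assumption that you want containerd to match kubelet's systemd cgroup driver from 3.3; drop that line if you manage it differently. The function name is illustrative and the demo patches a small sample file.

```bash
#!/usr/bin/env bash
# Sketch: apply the config.toml edits with sed instead of an editor.
set -euo pipefail

patch_containerd_config() {
  local cfg="$1" image="$2"
  # Point the sandbox (pause) image at the mirror, keeping the indentation
  sed -i -E "s#^([[:space:]]*sandbox_image = ).*#\1\"${image}\"#" "$cfg"
  # Assumption: runc should use the systemd cgroup driver, like kubelet
  sed -i -E 's#^([[:space:]]*SystemdCgroup = )false#\1true#' "$cfg"
}

demo_cfg=$(mktemp)
cat > "$demo_cfg" <<'EOF'
    sandbox_image = "registry.k8s.io/pause:3.8"
          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            SystemdCgroup = false
EOF
patch_containerd_config "$demo_cfg" "registry.aliyuncs.com/google_containers/pause:3.9"
cat "$demo_cfg"
# On a real node: patch_containerd_config /etc/containerd/config.toml \
#   registry.aliyuncs.com/google_containers/pause:3.9, then (re)start containerd
```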

# Start containerd

systemctl enable --now containerd

2.2 Update runc

mkdir -p /tmp/k8s-install/runc && cd /tmp/k8s-install/runc

wget https://github.com/opencontainers/runc/releases/download/v1.1.5/libseccomp-2.5.4.tar.gz

tar xf libseccomp-2.5.4.tar.gz

cd libseccomp-2.5.4/

# gperf must be installed first

yum install gperf -y

# If ./configure fails, install the build tools first: yum -y groupinstall "Development Tools" (or only gcc and make: yum -y install gcc make)

./configure

make && make install

find / -name "libseccomp.so"

cd /tmp/k8s-install/runc

wget https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64

chmod +x runc.amd64

mv runc.amd64 /usr/local/sbin/runc

# Run runc; if it prints its usage help, the installation works

runc

2.3 Configure a proxy

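Because docker.io and other public registries cannot be reached directly, images are pulled through a proxy. NO_PROXY excludes the key internal ranges so that the proxy does not interfere with communication inside the Kubernetes cluster.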

mkdir -p /usr/lib/systemd/system/containerd.service.d

cat > /usr/lib/systemd/system/containerd.service.d/http-proxy.conf<<EOF

[Service]

Environment="HTTP_PROXY=http://172.31.0.1:7890"

Environment="HTTPS_PROXY=http://172.31.0.1:7890"

Environment="NO_PROXY=localhost,127.0.0.1,::1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local"

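EOF

# Reload systemd and restart containerd so that the proxy settings take effect

systemctl daemon-reload && systemctl restart containerd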
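To double-check that the NO_PROXY ranges really cover the node IPs from 1.2 and the service and pod CIDRs used later (10.96.0.0/12 and 10.244.0.0/16), a small IPv4 CIDR membership test in pure bash is enough. These helpers are illustrative, not part of containerd, and only handle IPv4 addresses in a.b.c.d/n form.

```bash
#!/usr/bin/env bash
# Sketch: check that cluster addresses fall inside the NO_PROXY CIDRs. IPv4 only.
set -euo pipefail

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds when IPv4 address $1 lies inside CIDR $2 (e.g. 172.16.0.0/12)
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local ip_int net_int
  ip_int=$(ip_to_int "$1")
  net_int=$(ip_to_int "$net")
  (( (ip_int & mask) == (net_int & mask) ))
}

no_proxy_cidrs=(10.0.0.0/8 172.16.0.0/12 192.168.0.0/16)
# Node IPs from 1.2, plus one service IP and one pod IP from the cluster CIDRs
for ip in 172.31.0.111 172.31.0.112 172.31.0.113 10.96.0.1 10.244.0.10; do
  covered=no
  for cidr in "${no_proxy_cidrs[@]}"; do
    if ip_in_cidr "$ip" "$cidr"; then covered=yes; break; fi
  done
  echo "$ip covered=$covered"
done
```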

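2.4 Configure a non-HTTPS registry

Internal private registries sometimes serve only HTTP, whereas containerd connects over HTTPS by default, so image pulls fail. To work around this, declare the registry in /etc/containerd/config.toml and explicitly give it an http:// endpoint: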

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.tanqidi.com"]

endpoint = ["http://harbor.tanqidi.com:80"]
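containerd 1.7 still honors the registry.mirrors table above but marks it as deprecated in favor of per-registry hosts.toml files under a directory set by config_path. A hedged sketch of the equivalent hosts.toml for the HTTP Harbor registry, assuming config_path = "/etc/containerd/certs.d" under [plugins."io.containerd.grpc.v1.cri".registry] and that the mirrors table is removed (the two mechanisms should not be combined). The function name is illustrative and the demo writes into a temporary directory.

```bash
#!/usr/bin/env bash
# Sketch: declare an HTTP-only registry with containerd's hosts.toml layout.
set -euo pipefail

write_registry_hosts() {
  local certs_dir="$1" registry="$2" endpoint="$3"
  # One directory per registry host name, e.g. certs.d/harbor.tanqidi.com/
  mkdir -p "$certs_dir/$registry"
  cat > "$certs_dir/$registry/hosts.toml" <<EOF
server = "${endpoint}"

[host."${endpoint}"]
  capabilities = ["pull", "resolve"]
EOF
}

demo_dir=$(mktemp -d)
write_registry_hosts "$demo_dir" harbor.tanqidi.com "http://harbor.tanqidi.com:80"
cat "$demo_dir/harbor.tanqidi.com/hosts.toml"
# On a real node: write_registry_hosts /etc/containerd/certs.d harbor.tanqidi.com \
#   http://harbor.tanqidi.com:80 && systemctl restart containerd
```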

3. Deploy the Kubernetes cluster

3.1 Configure the yum repository

cat > /etc/yum.repos.d/k8s.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# List all available versions

yum --showduplicates list kubeadm --disablerepo="*" --enablerepo="kubernetes"

# Filter for a specific version

[root@k8s-master01 ~]# yum --showduplicates list kubeadm --disablerepo="*" --enablerepo="kubernetes" | grep 1.28.2

kubeadm.x86_64 1.28.2-0 @kubernetes

kubeadm.x86_64 1.28.2-0 kubernetes

3.2 Install version 1.28.2

yum -y install kubeadm-1.28.2-0 kubelet-1.28.2-0 kubectl-1.28.2-0

Installing:

kubeadm x86_64 1.28.2-0 kubernetes 11 M

kubectl x86_64 1.28.2-0 kubernetes 11 M

kubelet x86_64 1.28.2-0 kubernetes 21 M

Installing for dependencies:

conntrack-tools x86_64 1.4.4-7.el7 base 187 k

cri-tools x86_64 1.26.0-0 kubernetes 8.6 M

kubernetes-cni x86_64 1.2.0-0 kubernetes 17 M

libnetfilter_cthelper x86_64 1.0.0-11.el7 base 18 k

libnetfilter_cttimeout x86_64 1.0.0-7.el7 base 18 k

libnetfilter_queue x86_64 1.0.2-2.el7_2 base 23 k

socat x86_64 1.7.3.2-2.el7 base 290 k

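3.3 Configure kubelet

To keep kubelet's cgroup driver consistent with the container runtime's (containerd in this guide, where the runc option SystemdCgroup controls it), set kubelet to the systemd driver in the following file.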

# vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
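Since kubeadm v1.22, kubelet's cgroupDriver defaults to systemd when kubeadm generates its configuration, and the --cgroup-driver flag itself is deprecated in favor of the kubelet config file. If you prefer to state the driver explicitly, one option is to append a KubeletConfiguration document to the kubeadm-config.yaml generated in 3.4 before running kubeadm init. The helper name is hypothetical; the demo appends to a temporary file.

```bash
#!/usr/bin/env bash
# Sketch: pin the kubelet cgroup driver in kubeadm's --config file.
set -euo pipefail

append_kubelet_config() {
  local cfg="$1"
  # kubeadm reads every YAML document in the file passed to --config
  cat >> "$cfg" <<'EOF'
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}

demo_cfg=$(mktemp)
echo 'kind: ClusterConfiguration' > "$demo_cfg"
append_kubelet_config "$demo_cfg"
cat "$demo_cfg"
# Real use, before kubeadm init: append_kubelet_config kubeadm-config.yaml
```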

# Only enable kubelet at boot; it has no configuration file yet and starts automatically once the cluster is initialized

systemctl enable kubelet

3.4 Initialize the cluster

[root@k8s-master01 kubelet]# kubeadm config images list

I0720 01:48:41.111331 10165 version.go:256] remote version is much newer: v1.33.3; falling back to: stable-1.28

registry.k8s.io/kube-apiserver:v1.28.15

registry.k8s.io/kube-controller-manager:v1.28.15

registry.k8s.io/kube-scheduler:v1.28.15

registry.k8s.io/kube-proxy:v1.28.15

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.9-0

registry.k8s.io/coredns/coredns:v1.10.1

# Generate a default configuration file

kubeadm config print init-defaults > kubeadm-config.yaml

# Edit the relevant fields: advertiseAddress, name, imageRepository, kubernetesVersion and podSubnet

[root@k8s-master01 k8s-install]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.31.0.111
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master01
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.28.2
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
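The edits above can also be applied non-interactively. This sketch assumes the default init-defaults layout (two-space indentation, name: node, no podSubnet line) and uses the values from this guide; the stand-in file in the demo is abbreviated, and the function name is made up for illustration.

```bash
#!/usr/bin/env bash
# Sketch: customize `kubeadm config print init-defaults` output with sed.
set -euo pipefail

customize_kubeadm_config() {
  local cfg="$1" addr="$2" node="$3" repo="$4" version="$5" pod_cidr="$6"
  sed -i -E \
    -e "s#^( *)advertiseAddress: .*#\1advertiseAddress: ${addr}#" \
    -e "s#^( *)name: node\$#\1name: ${node}#" \
    -e "s#^imageRepository: .*#imageRepository: ${repo}#" \
    -e "s#^kubernetesVersion: .*#kubernetesVersion: ${version}#" \
    "$cfg"
  # init-defaults prints no podSubnet; insert one right above serviceSubnet
  if ! grep -q 'podSubnet:' "$cfg"; then
    sed -i -E "s#^( *)serviceSubnet: #\1podSubnet: ${pod_cidr}\n&#" "$cfg"
  fi
}

# Abbreviated stand-in for the generated kubeadm-config.yaml
demo_cfg=$(mktemp)
cat > "$demo_cfg" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
nodeRegistration:
  name: node
imageRepository: registry.k8s.io
kubernetesVersion: 1.28.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
EOF
customize_kubeadm_config "$demo_cfg" 172.31.0.111 k8s-master01 \
  registry.aliyuncs.com/google_containers 1.28.2 10.244.0.0/16
cat "$demo_cfg"
```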

# Initialize

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm-config.yaml

[init] Using Kubernetes version: v1.28.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.31.0.111]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [172.31.0.111 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [172.31.0.111 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 4.001248 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.31.0.111:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8950b129b45e39eb9d5f11c05d44ab11575cbb7e38c780a15f26d4c2ffc781be
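The bootstrap token in this join command expires after 24 hours (ttl: 24h0m0s in the config above); afterwards, kubeadm token create --print-join-command on the master prints a fresh join command. If you only need the --discovery-token-ca-cert-hash value, it can be recomputed from /etc/kubernetes/pki/ca.crt with the openssl pipeline from the kubeadm documentation. The sketch runs it against a throwaway CA so it works anywhere openssl is installed; the function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: recompute the discovery hash (SHA-256 of the CA's DER public key).
# On a master the input would be /etc/kubernetes/pki/ca.crt.
set -euo pipefail

ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Throwaway self-signed CA for the demo
demo_dir=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=demo-ca" \
  -keyout "$demo_dir/ca.key" -out "$demo_dir/ca.crt" 2>/dev/null
hash=$(ca_cert_hash "$demo_dir/ca.crt")
printf '%s\n' "--discovery-token-ca-cert-hash sha256:${hash}"
```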

# Run on the master node

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Run on each worker node

kubeadm join 172.31.0.111:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8950b129b45e39eb9d5f11c05d44ab11575cbb7e38c780a15f26d4c2ffc781be

3.5 Configure IPVS mode for kube-proxy (optional)

kubectl -n kube-system get configmap kube-proxy -o yaml

# Edit the ConfigMap

kubectl -n kube-system edit configmap kube-proxy

# Change mode to ipvs (the default empty value means iptables)

mode: "ipvs"

# Delete the kube-proxy pods so that they are recreated with the new configuration

kubectl -n kube-system delete pod -l k8s-app=kube-proxy
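Instead of kubectl edit, the ConfigMap can be piped through sed. This sketch assumes the ConfigMap contains the default line mode: "" and rewrites only that line; the kubectl pipeline is shown as a comment, and the demo runs the filter on an inline sample. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: switch kube-proxy to IPVS without an interactive editor.
set -euo pipefail

set_ipvs_mode() {
  # Rewrite an empty mode (iptables on Linux) to "ipvs"; other lines pass through
  sed -E 's/^([[:space:]]*mode: )""$/\1"ipvs"/'
}

# Real cluster (on a master with kubectl access):
#   kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode \
#     | kubectl replace -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
sample='    metricsBindAddress: ""
    mode: ""
    nodePortAddresses: null'
result=$(printf '%s\n' "$sample" | set_ipvs_mode)
printf '%s\n' "$result"
```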

# Verify that IPVS is in effect

[root@hybxvdka01 yaml]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 10.133.179.71:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.55.177:53 Masq 1 0 0

-> 10.244.55.179:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.55.177:9153 Masq 1 0 0

-> 10.244.55.179:9153 Masq 1 0 0

TCP 10.102.156.217:5473 rr

-> 10.133.179.71:5473 Masq 1 0 0

TCP 10.104.10.46:80 rr

-> 10.244.55.180:80 Masq 1 0 0

-> 10.244.55.182:80 Masq 1 0 0

-> 10.244.55.183:80 Masq 1 0 0

TCP 10.106.58.116:443 rr

-> 10.244.55.178:5443 Masq 1 0 0

-> 10.244.248.216:5443 Masq 1 0 0

TCP 10.133.179.71:31001 rr

-> 10.244.55.180:80 Masq 1 0 0

-> 10.244.55.182:80 Masq 1 0 0

-> 10.244.55.183:80 Masq 1 0 0

TCP 10.244.55.128:31001 rr

-> 10.244.55.180:80 Masq 1 0 0

-> 10.244.55.182:80 Masq 1 0 0

-> 10.244.55.183:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.55.177:53 Masq 1 0 0

-> 10.244.55.179:53 Masq 1 0 0

3.6 Install Helm (optional)

Helm usually only needs to be installed on a master node, for example the first one.

wget https://get.helm.sh/helm-v3.14.4-linux-amd64.tar.gz

tar -zxvf helm-v3.14.4-linux-amd64.tar.gz

cd linux-amd64

chmod +x helm

cp helm /usr/local/bin/

[root@hybxvdka01 ~]# helm version

version.BuildInfo{Version:"v3.14.4", GitCommit:"81c902a123462fd4052bc5e9aa9c513c4c8fc142", GitTreeState:"clean", GoVersion:"go1.21.9"}

4. Deploy the network plugin

# Deploy the Tigera operator

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/tigera-operator.yaml

# Download the custom resources manifest

wget https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/custom-resources.yaml

# Edit custom-resources.yaml and change cidr: 192.168.0.0/16 to the pod subnet defined earlier, 10.244.0.0/16

[root@k8s-master01 k8s-install]# cat custom-resources.yaml

# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 10.244.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
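To script the CIDR edit, or to choose a different encapsulation before installing (see 4.2), a sed sketch like the following can work. It assumes a single ipPools entry as in the v3.26.1 manifest; the encapsulation values listed in the comment are those of the operator's Installation API, and the function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: set the IP pool CIDR and encapsulation in custom-resources.yaml.
set -euo pipefail

set_calico_pool() {
  local file="$1" cidr="$2" encap="$3"
  # Installation API encapsulation values: IPIP, IPIPCrossSubnet,
  # VXLAN, VXLANCrossSubnet, None
  sed -i -E \
    -e "s#^( *)cidr: .*#\1cidr: ${cidr}#" \
    -e "s#^( *)encapsulation: .*#\1encapsulation: ${encap}#" \
    "$file"
}

demo_file=$(mktemp)
cat > "$demo_file" <<'EOF'
    ipPools:
    - blockSize: 26
      cidr: 192.168.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
EOF
set_calico_pool "$demo_file" 10.244.0.0/16 VXLANCrossSubnet
cat "$demo_file"
# For IPIP: set_calico_pool custom-resources.yaml 10.244.0.0/16 IPIP
```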

# Apply

kubectl create -f custom-resources.yaml

4.1 Private registry

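In Kubernetes, a workload pulls its images from the registry you specify; if none is specified, it defaults to docker.io. You can also configure an image pull secret. While deploying Kubernetes, however, you can skip the secret for now: remove that field and make the relevant project (namespace) in Harbor public. Once Kubernetes is deployed and running, configure a shared imagePullSecrets for those Deployments.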

apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  variant: Calico
  imagePullSecrets:
  - name: tigera-pull-secret
  registry: harbor.tanqidi.com/k8s

4.2 Network mode

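Once Calico is deployed, you can check the network mode with kubectl get ippool -A -o yaml.

To switch the network mode from VXLAN to IPIP, change encapsulation: VXLANCrossSubnet to encapsulation: IPIP in custom-resources.yaml; the operator then creates the IP pool with ipipMode: Always and vxlanMode: Never, as in the output below. Because the ipPools section cannot be modified after installation, Calico must also be deleted and recreated for the change to take effect, so it is best to decide on the network mode before deploying.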

[root@master01 calico]# kubectl get ippool -A -o yaml

apiVersion: v1
items:
- apiVersion: crd.projectcalico.org/v1
  kind: IPPool
  metadata:
    annotations:
      projectcalico.org/metadata: '{"uid":"804a10ad-9a56-4e3e-a128-aebcca095174","creationTimestamp":"2025-07-24T12:26:30Z"}'
    creationTimestamp: "2025-07-24T12:26:30Z"
    generation: 1
    name: default-ipv4-ippool
    resourceVersion: "24315"
    uid: 813ac52f-414e-4e25-ba00-c0ee5f0b9dd0
  spec:
    allowedUses:
    - Workload
    - Tunnel
    blockSize: 26
    cidr: 10.244.0.0/16
    ipipMode: Always
    natOutgoing: true
    nodeSelector: all()
    vxlanMode: Never
kind: List
metadata:
  resourceVersion: ""

x. Change the MTU (optional)

Not yet tested.
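The value 1450 in the patch command corresponds to a 1500-byte NIC minus the 50-byte VXLAN header; IP-in-IP costs 20 bytes. A small helper makes that arithmetic explicit. The overheads are taken from Calico's MTU guidance for IPv4, CrossSubnet modes are sized for the encapsulated case, and the function name and interface name ens33 are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: derive a Calico MTU from the NIC MTU minus the encapsulation overhead.
set -euo pipefail

calico_mtu() {
  local nic_mtu="$1" encap="$2" overhead
  case "$encap" in
    None) overhead=0 ;;
    IPIP|IPIPCrossSubnet) overhead=20 ;;
    VXLAN|VXLANCrossSubnet) overhead=50 ;;
    *) echo "unknown encapsulation: $encap" >&2; return 1 ;;
  esac
  echo $(( nic_mtu - overhead ))
}

calico_mtu 1500 VXLANCrossSubnet   # prints 1450
calico_mtu 1500 IPIP               # prints 1480
# NIC MTU on a real node: cat /sys/class/net/ens33/mtu
```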

kubectl patch installation.operator.tigera.io default --type merge -p '{"spec":{"calicoNetwork":{"mtu":1450}}}'

5. Verify the environment

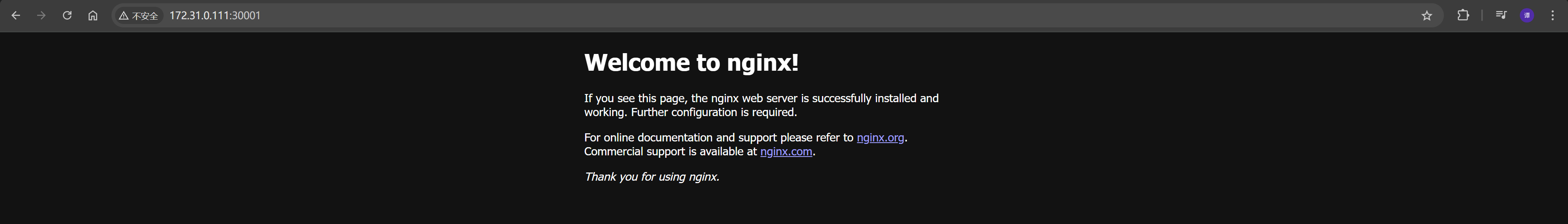

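The manifest below deploys nginx:1.22 with 3 replicas and exposes container port 80 on NodePort 30001 of every node for external access; the image pull policy is IfNotPresent, so a local image is used when one exists. The required pod anti-affinity rule allows at most one replica per node: with only two schedulable workers (the master keeps its NoSchedule taint), the third replica stays Pending unless you remove the master taint or change the rule to preferredDuringSchedulingIgnoredDuringExecution.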

cat > nginx-nodeport.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: "kubernetes.io/hostname" # at most one replica per node
      containers:
      - name: nginx
        image: nginx:1.22
        ports:
        - containerPort: 80
        imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30001
EOF

kubectl apply -f nginx-nodeport.yaml

[root@k8s-master01 tmp]# kubectl get po -A -owide |grep -v kubesphere

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-apiserver calico-apiserver-67c976f899-nlf84 1/1 Running 0 46m 10.244.79.68 k8s-worker01 <none> <none>

calico-apiserver calico-apiserver-67c976f899-qshb5 1/1 Running 0 46m 10.244.69.195 k8s-worker02 <none> <none>

calico-system calico-kube-controllers-5cdb789774-t2pb7 1/1 Running 0 47m 10.244.79.65 k8s-worker01 <none> <none>

calico-system calico-node-5nnmp 1/1 Running 0 47m 172.31.0.112 k8s-worker01 <none> <none>

calico-system calico-node-mxcjb 1/1 Running 0 47m 172.31.0.113 k8s-worker02 <none> <none>

calico-system calico-node-s7crq 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

calico-system calico-typha-6875fc4854-nt9c2 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

calico-system calico-typha-6875fc4854-rhdl4 1/1 Running 1 (47m ago) 47m 172.31.0.113 k8s-worker02 <none> <none>

calico-system csi-node-driver-qhxdm 2/2 Running 0 47m 10.244.32.129 k8s-master01 <none> <none>

calico-system csi-node-driver-wkfwh 2/2 Running 0 47m 10.244.79.67 k8s-worker01 <none> <none>

calico-system csi-node-driver-z4t6z 2/2 Running 0 44m 10.244.69.196 k8s-worker02 <none> <none>

default nginx-deployment-67856bc4f5-249kk 1/1 Running 0 40m 10.244.69.198 k8s-worker02 <none> <none>

default nginx-deployment-67856bc4f5-rrrcl 1/1 Running 0 40m 10.244.69.197 k8s-worker02 <none> <none>

default nginx-deployment-67856bc4f5-vlf9d 1/1 Running 0 40m 10.244.79.69 k8s-worker01 <none> <none>

kube-system coredns-66f779496c-bq7dx 1/1 Running 0 47m 10.244.79.66 k8s-worker01 <none> <none>

kube-system coredns-66f779496c-wfwjj 1/1 Running 0 47m 10.244.69.193 k8s-worker02 <none> <none>

kube-system etcd-k8s-master01 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

kube-system kube-apiserver-k8s-master01 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

kube-system kube-controller-manager-k8s-master01 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

kube-system kube-proxy-6kz5k 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

kube-system kube-proxy-rhn7q 1/1 Running 0 47m 172.31.0.112 k8s-worker01 <none> <none>

kube-system kube-proxy-x9hqq 1/1 Running 0 47m 172.31.0.113 k8s-worker02 <none> <none>

kube-system kube-scheduler-k8s-master01 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

kube-system snapshot-controller-0 1/1 Running 0 31m 10.244.79.72 k8s-worker01 <none> <none>

tigera-operator tigera-operator-94d7f7696-5z5wf 1/1 Running 0 47m 172.31.0.111 k8s-master01 <none> <none>

[root@k8s-master01 tmp]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-controller-manager-svc ClusterIP None <none> 10257/TCP 33m

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 113m

kube-scheduler-svc ClusterIP None <none> 10259/TCP 33m

kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 33m

[root@k8s-master01 tmp]# dig -t a tanqidi.com @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.16 <<>> -t a tanqidi.com @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 45406

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;tanqidi.com. IN A

;; ANSWER SECTION:

tanqidi.com. 30 IN A 193.112.95.180

;; Query time: 39 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Sun Jul 20 03:56:21 CST 2025

;; MSG SIZE rcvd: 67

6. High availability

6.1 Configure hosts

172.31.0.111 k8s-master01

172.31.0.112 k8s-master02

172.31.0.113 k8s-master03

# vip

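172.31.0.100 vip.k8s.local

6.2 Configure keepalived

# Install keepalived on all three masters before writing its configuration

yum install -y keepalived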
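The three keepalived configurations below differ only in state, priority, unicast_src_ip and the peer list. A sketch that renders them from one template, assuming the interface name ens33 and the VIP 172.31.0.100 used in this section (overridable through the IFACE and VIP variables); the function name is illustrative and the demo writes to a temporary file.

```bash
#!/usr/bin/env bash
# Sketch: render the per-node keepalived.conf from one template.
set -euo pipefail

render_keepalived() {
  local state="$1" priority="$2" src_ip="$3"
  shift 3
  local peer peers=""
  for peer in "$@"; do
    peers+="        ${peer}"$'\n'
  done
  peers=${peers%$'\n'}   # drop the trailing newline
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${IFACE:-ens33}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ${AUTH_PASS}
    }
    unicast_src_ip ${src_ip}
    unicast_peer {
${peers}
    }
    virtual_ipaddress {
        ${VIP:-172.31.0.100}
    }
}
EOF
}

AUTH_PASS="D0ECF873-977D-4522-83A9-EDA75DE66228"
demo_conf=$(mktemp)
# master-02: BACKUP, priority 90, peers are the other two masters
render_keepalived BACKUP 90 172.31.0.112 172.31.0.111 172.31.0.113 > "$demo_conf"
cat "$demo_conf"
# On master-01: render_keepalived MASTER 100 172.31.0.111 172.31.0.112 172.31.0.113 \
#   > /etc/keepalived/keepalived.conf
```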

6.2.1 master-01

cat >/etc/keepalived/keepalived.conf<<EOF

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass D0ECF873-977D-4522-83A9-EDA75DE66228

}

unicast_src_ip 172.31.0.111

unicast_peer {

172.31.0.112

172.31.0.113

}

virtual_ipaddress {

172.31.0.100

}

}

EOF

6.2.2 master-02

cat >/etc/keepalived/keepalived.conf<<EOF

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass D0ECF873-977D-4522-83A9-EDA75DE66228

}

unicast_src_ip 172.31.0.112

unicast_peer {

172.31.0.111

172.31.0.113

}

virtual_ipaddress {

172.31.0.100

}

}

EOF

6.2.3 master-03

cat >/etc/keepalived/keepalived.conf<<EOF

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass D0ECF873-977D-4522-83A9-EDA75DE66228

}

unicast_src_ip 172.31.0.113

unicast_peer {

172.31.0.111

172.31.0.112

}

virtual_ipaddress {

172.31.0.100

}

}

EOF

6.2.4 Start keepalived

systemctl enable --now keepalived

6.3 haproxy

yum install -y haproxy

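Because HAProxy runs on the same three masters, where kube-apiserver already listens on port 6443, HAProxy listens on 16443 instead. Perform this step on all three master nodes.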

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 172.31.0.111:6443 check

server k8s-master02 172.31.0.112:6443 check

server k8s-master03 172.31.0.113:6443 check

EOF

# Start HAProxy

systemctl enable --now haproxy

6.4 Reset Kubernetes

kubeadm reset -f

rm -rf /etc/kubernetes/

rm -rf ~/.kube/

rm -rf /var/lib/kubelet/

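rm -rf /var/lib/etcd/

# kubeadm reset does not clean up the CNI configuration; remove it as well

rm -rf /etc/cni/net.d

# Clear all journal logs

journalctl --rotate

journalctl --vacuum-time=1s

6.5 Initialize the cluster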

--control-plane-endpoint must use the VIP's domain name (vip.k8s.local) and HAProxy's load-balanced port 16443:

kubeadm init \

--control-plane-endpoint "vip.k8s.local:16443" \

--upload-certs \

--apiserver-advertise-address=172.31.0.111 \

--apiserver-bind-port=6443 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--image-repository=registry.aliyuncs.com/google_containers \

--kubernetes-version=1.28.2 \

--node-name=k8s-master01

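# Join the other two masters with the control-plane join command printed by kubeadm init (it includes --control-plane and --certificate-key)

# The uploaded certificates expire after two hours; regenerate the key with kubeadm init phase upload-certs --upload-certs if needed

# Remove the control-plane taint so that the masters can also run workloads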

kubectl taint nodes k8s-master01 node-role.kubernetes.io/control-plane-

kubectl taint nodes k8s-master02 node-role.kubernetes.io/control-plane-

kubectl taint nodes k8s-master03 node-role.kubernetes.io/control-plane-

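x. Final notes

If your machines cannot reach docker.io or other overseas registries, use the Alibaba Cloud mirror registry.aliyuncs.com/google_containers instead to speed up image pulls and avoid network problems. If you prefer to keep using the official registries, you can instead configure a proxy for containerd so that it reaches the overseas registries directly and pulls images the usual way.

Deploying Kubernetes v1.28.x with kubeadm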

https://tanqidi.com/archives/35e71990-6711-4c54-b8e0-887e6940682b
